Before you start

This guide assumes you have already launched a JupyterLab workspace, as described in the Transformations Overview.Environment variables

There are several environment variables that will be available when a transformation script runs in hotglue during a job:| Environment Variable | Description |

|---|---|

TENANT | ID of the tenant (test-user) |

FLOW | ID of the flow (FZev7QqK-) |

ENV_ID | ID of the hotglue environment (prod.hg.example.com) |

JOB_ID | ID of the job (ZVonkl) |

API_KEY | Environment API key (XXXX) |

API_URL | URL of the hotglue API (https://api.hotglue.com) |

JOB_ROOT | A unique key marking the root directory for the job (cust_1/flows/FZev7QqK-/jobs/2024/07/4/25/17/ZVonkl) |

SYNC_TYPE | Type of sync (incremental_sync, auto_sync, or full_sync) |

JOB_TYPE | Type of the job, in V2 flows (write or read) |

CONNECTOR_ID | ID of the connector, in V2 flows (salesforce) |

TAP | The connector managing the sync process, in both V1 and V2 flows (api) |

TARGET | The connector managing the export, in both V1 and V2 flows (salesforce) |

os.environ:

Python

Standard directories

In hotglue, there are three standard directories:| Directory Path | Description |

|---|---|

sync-output | This directory contains all input data from the data source (typically Parquet or CSV). |

snapshots | This directory can be used to store (snapshot) any data for the current tenant. It also stores the tenant-config.json used for tenant-specific settings. (typically JSON, Parquet, or CSV). |

etl-output | This directory is where you should put all output data, formatted as needed for the target your flow is using (typically a data.singer file). |

The Working Directory

When hotglue executes your ETL script, it provides a structured working directory with all the necessary files and data for your transformation. You should reproduce this directory structure when developing scripts locally.Directory Structure Overview

📄 Configuration Files

The key JSON configuration files available at runtime by your ETL script are:- catalog.json - Schema catalog for the data

- source-config.json - Source system credentials and configuration

- target-config.json - Target system credentials and configuration

- target-catalog.json - Target schema catalog (V2 write jobs only)

- source-state.json - Incremental sync state and bookmarks

- state.json - Current job execution state

tenant-config.json, on the other hand, is accessed from the snapshots directory. If you need to read or modify this object, your code will look something like below:

Example: Read the data

To start, we will import pandas and the gluestick Python package, and establish the standard input/output directories above. Note:snapshots are optional, and may not be needed for your scripts.

etl.ipynb

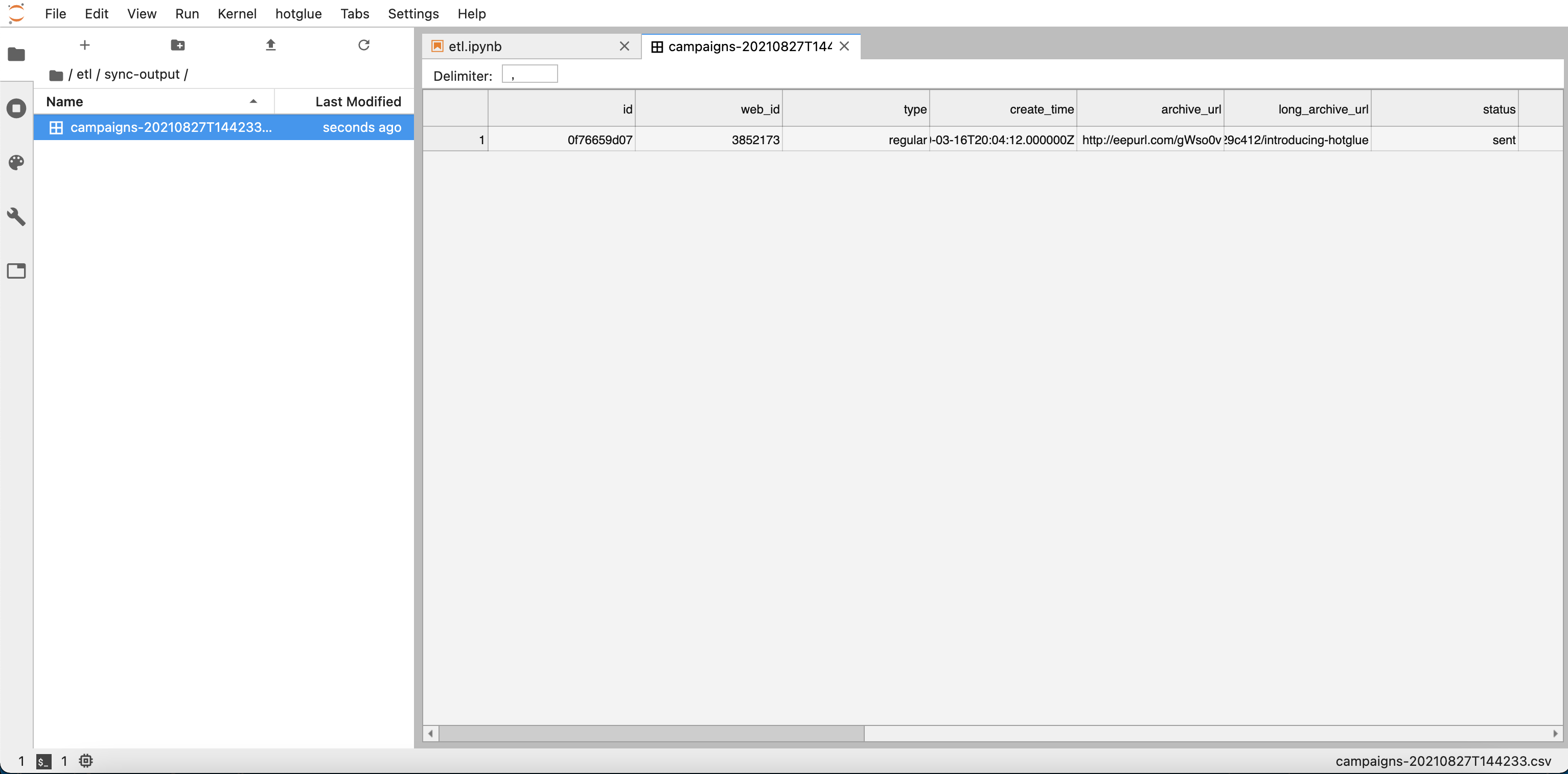

sync_output folder contains a CSV file called campaigns. Learn more how to get sample data in the Debugging a script section.

Now we can go ahead and read the data in

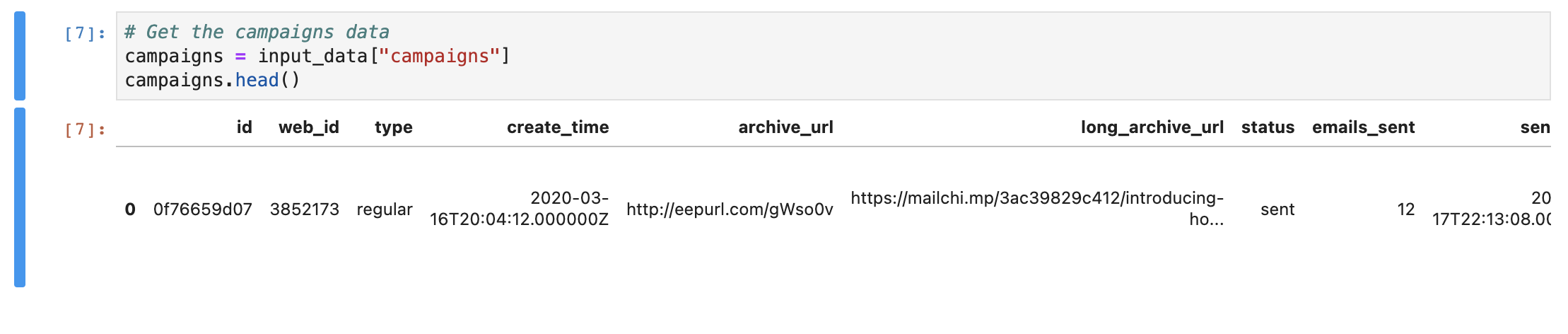

Now we can go ahead and read the data in INPUT_DIR with gluestick’s reader function.

etl.ipynb

campaigns data as follows:

etl.ipynb

campaigns.head() we can preview the data

Manipulate the data

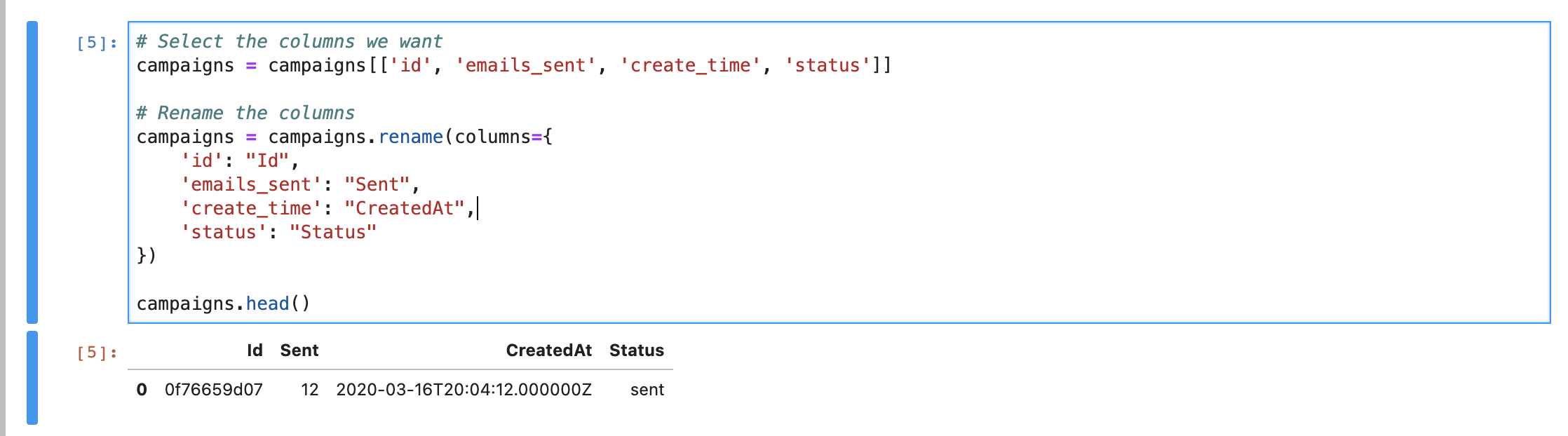

Now that you have the data as a pandas DataFrame, you can do any transformations you need to. For this example, we will select a few of the columns:id, emails_sent, create_time, and status, and then rename them.

etl.ipynb

Write the output data

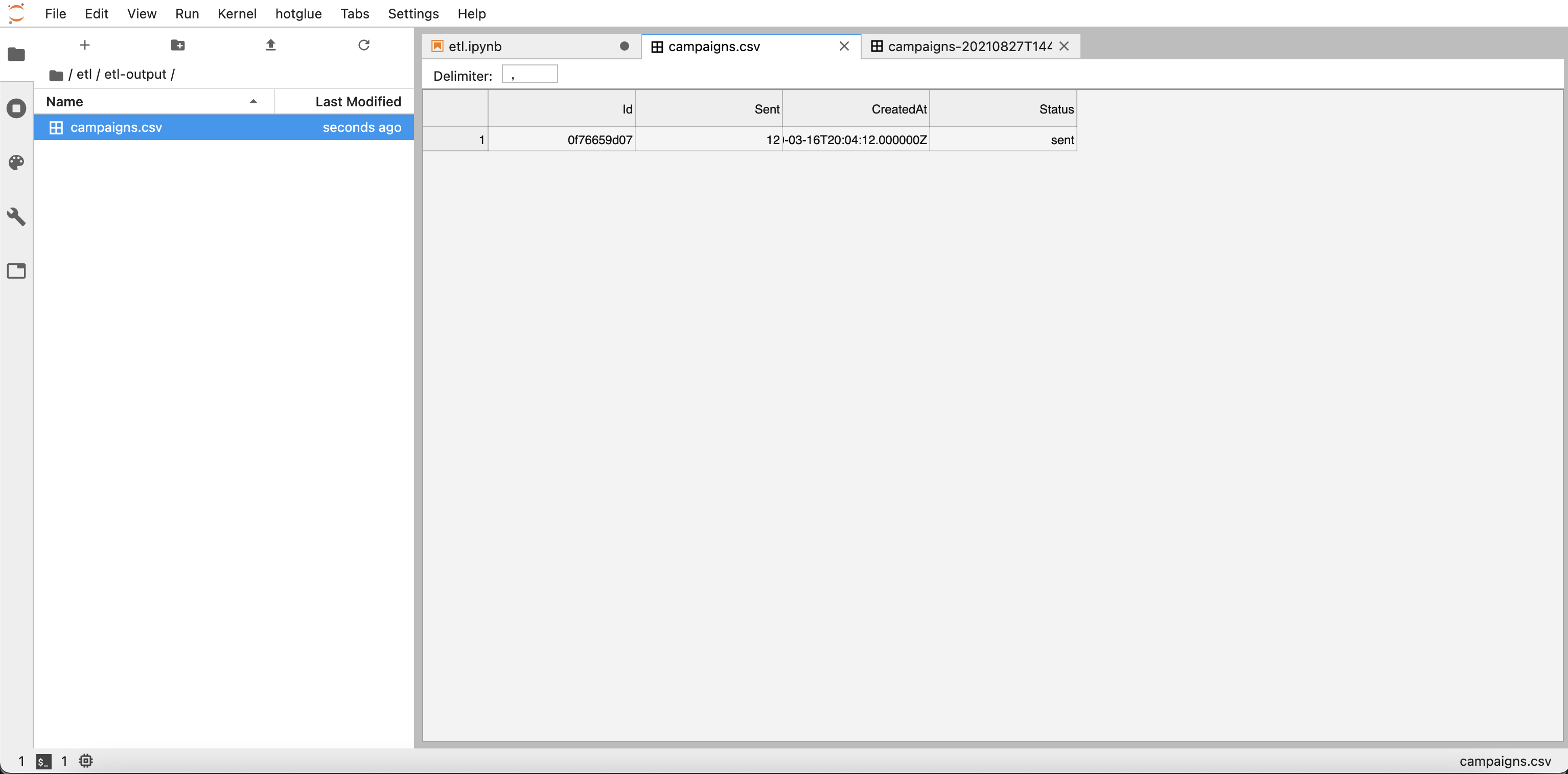

Finally, we can write the output to the standardOUTPUT_DIR using pandas to_csv function:

etl.ipynb

etl-output directory:

If you want to write this data to a non-filestore target, such as a database, CRM, or any other standard Singer target, you can use Gluestick’s

If you want to write this data to a non-filestore target, such as a database, CRM, or any other standard Singer target, you can use Gluestick’s to_singer function in lieu of to_csv:

etl.ipynb